As aspiring lawyers everywhere learned with horror that GPT-4 is capable of passing the bar exam (in the 90th percentile of test-takers, no less), a whole bunch of academics were not-so-quietly putting ChatGPT to the test on accounting. Literally.

In a paper published in Issues in Accounting Education, a total of 328 authors from 186 different institutions in 14 countries sought to answer the question: how well does ChatGPT answer accounting assessment questions? TL;DR: Not great, Bob. But not bad either.

Abstract:

ChatGPT, a language-learning model chatbot, has garnered considerable attention for its ability to respond to users’ questions. Using data from 14 countries and 186 institutions, we compare ChatGPT and student performance for 28,085 questions from accounting assessments and textbook test banks. As of January 2023, ChatGPT provides correct answers for 56.5 percent of questions and partially correct answers for an additional 9.4 percent of questions. When considering point values for questions, students significantly outperform ChatGPT with a 76.7 percent average on assessments compared to 47.4 percent for ChatGPT if no partial credit is awarded and 56.5 percent if partial credit is awarded. Still, ChatGPT performs better than the student average for 15.8 percent of assessments when we include partial credit. We provide evidence of how ChatGPT performs on different question types, accounting topics, class levels, open/closed assessments, and test bank questions. We also discuss implications for accounting education and research.

Here are the specifics on the study:

During the months of December 2022 and January 2023 each coauthor entered assessment questions into ChatGPT and evaluated the accuracy of its responses. The study includes a total of 25,817 questions (25,181 gradable by ChatGPT) that appeared across 869 different class assessments, as well as 2,268 questions from textbook test banks covering topics such as accounting information systems (AIS), auditing, financial accounting, managerial accounting, and tax. The questions vary in terms of question type, topic area, and difficulty. The coauthors evaluated ChatGPT’s answers to the questions they entered and determined whether they were correct, partially correct, or incorrect.

Across all assessments, human students scored an average of 76.7 percent, while ChatGPT scored 47.4 percent based on fully correct answers and an estimated 56.5 percent if partial credit was included. BUT…

[W]e also find that ChatGPT scored higher than the student average on 11.3 percent (without partial credit) or 15.8 percent (with partial credit) of assessments. The study also revealed differences in ChatGPT’s performance based on the topic area of the assessment. Specifically, ChatGPT performed relatively better on AIS and auditing assessments compared to tax, financial, and managerial assessments. We suggest one possible reason this may occur is that AIS and auditing questions typically do not include mathematical type questions, which ChatGPT currently struggles to answer correctly.

Can relate.

ChatGPT shined on true/false and multiple choice questions, with full-credit accuracy rates of 68.7 percent and 59.5 percent, respectively. Where it struggled was with workout and short-answer questions, with accuracy rates of 28.7 percent and 39.1 percent, respectively. ChatGPT answered textbook test bank questions correctly 64.3 percent of the time, doing especially well on audit questions (83.1 percent correct) and AIS (76.8 percent correct).

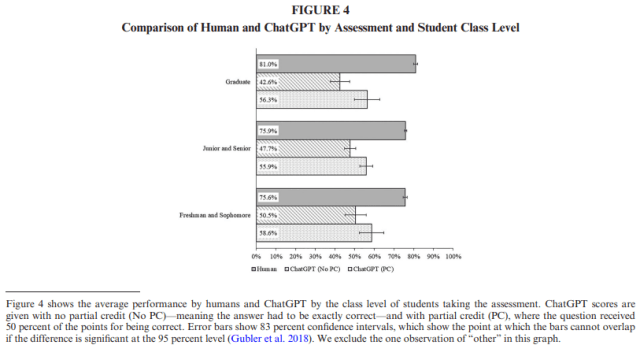

Some charts of humans vs. AI:

Given the unique nature of the crowdsourced data collection process, the paper’s authors offered some anecdotes that individual authors found interesting and wished to highlight. There are many of these anecdotes in the paper, but since most of you are checked out by this point in the article, I’m including only a few.

- During testing, ChatGPT did not always recognize it was performing mathematical operations and made nonsensical errors, such as adding two numbers in a subtraction problem or dividing numbers incorrectly. This is especially problematic for workout problems.

- ChatGPT often provided descriptive explanations for its answers, even if they were incorrect. This raises the important question about how its authoritative, yet incorrect, responses may impact students. Similarly, at times ChatGPT’s descriptions were accurate, but its selection of multiple-choice answers was incorrect.

- ChatGPT sometimes “made up” facts. For instance, when providing a reference, it generates a real-looking reference that is completely fabricated—the work, and sometimes authors, do not even exist.

- ChatGPT could generate code and find errors in previously written code. For example, given a database schema or flat file, ChatGPT could write correct SQL and normalize the data.

- If unable to directly generate answers, ChatGPT could provide detailed instructions to complete a question. For instance, it could provide steps on using a software tool or sample code to solve problems that require access to a specific database.

- In a case study context, ChatGPT was able to provide responses to questions based on assessing past strategic actions of the firm. However, where data was required to be used, ChatGPT was unable to respond to the questions other than providing formulas. ChatGPT performed even worse where there was a requirement for students to apply knowledge. This highlights that ChatGPT is a general-purpose tool as opposed to an accounting specific tool. It is not unsurprising, therefore, that students are better at responding to more accounting-specific questions where the technology is not yet trained to answer accounting-specific questions.

In the conversation about AI replacing accountants, the current consensus is that it is best suited for repetitive tasks of the sort monkeys (and interns) can do and not higher-level thinking, which aligns with what the researchers uncovered. They pointed out that ChatGPT is a general-purpose technology (the GPT itself stands for “Generative Pre-trained Transformer”) that was not specifically trained on accounting content and therefore may not perform well on it “as faculty and textbook authors often design questions to elicit nuanced understanding from accounting students, which may not be comprehended by AI algorithms.” Turns out your professors weren’t just being dicks by throwing stumpers at you; they were trying to train you to think critically.

Before I wrap this up, the researchers offered a bit of sage advice to accounting educators I feel compelled to pass along:

Perhaps the most important contribution of this paper is to highlight that accounting educators need to prepare for a future that includes broad access to AI to serve their students and the needs of the profession effectively. We believe that accounting educators should engage in discussions about the impact of AI on their teaching. This includes addressing questions such as: How should students be allowed to use AI? What material should be memorized versus referenced? Can interactions with AI enhance students’ learning, and if yes, how? What value do educators and accountants provide beyond what AI can provide? These are all important questions that accounting educators should discuss and research. As AI technology continues to improve, educators need to prepare themselves and their students for the future, making AI technology a promising area for future research.

The ChatGPT Artificial Intelligence Chatbot: How Well Does It Answer Accounting Assessment Questions? [Issues in Accounting Education Volume 38, Number 4, 2023]